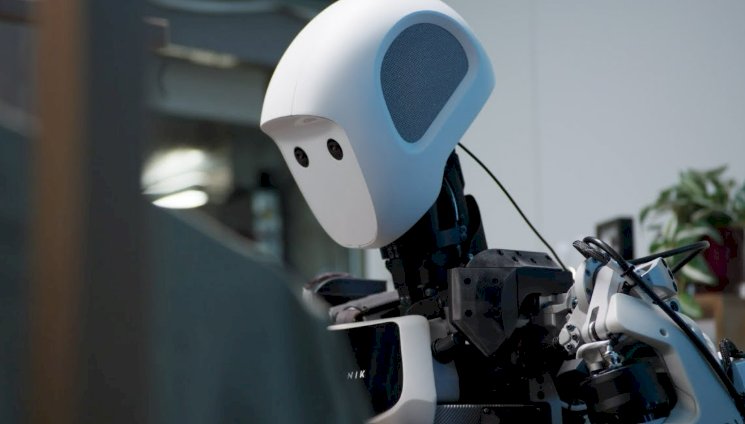

Google DeepMind has unveiled Gemini Robotics 1.5 and Gemini Robotics-ER 1.5, bringing a leap forward in how robots reason, plan, and act in the physical world.

What’s new and why it matters

- From single commands to multi-step reasoning

Unlike prior systems that could execute only one instruction at a time, these new models let robots think ahead and break a task into steps. Sorting laundry, for example, is no longer just “pick up a shirt” but “decide which bin each item goes in, pick each one up, move carefully, and avoid collisions.”

- Vision-language-action architecture

The setup connects visual perception, natural language understanding, and physical action. Gemini Robotics-ER 1.5 handles embodied reasoning (thinking about the physical world and planning), while Gemini Robotics 1.5, the vision-language-action model, turns visual input and instructions into robot actions. This coupling lets robots sense, reason, and act more coherently.

- Motion transfer across robot types

A striking capability is that what one robot learns can transfer to others. For instance, a motion learned on a two-arm robot may be usable by a humanoid robot. This helps scale learning across different hardware platforms.

- Safety, transparency, and “thinking before doing”

The system doesn’t just react; it builds intermediate plans in natural language, reasons about possible outcomes, then carries out motions. It also includes safety reasoning (e.g. collision avoidance) and explains its own decision logic to some extent.
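The "plan in natural language, check, then act" loop described above can be sketched in a few lines of Python. This is only an illustrative toy under stated assumptions, not DeepMind's implementation or API: `plan`, `is_safe`, `execute`, and `run` are hypothetical names, the planner is a hard-coded rule standing in for the reasoning model, and the executor merely records where each item ends up instead of moving a robot.

```python
from dataclasses import dataclass


@dataclass
class Step:
    instruction: str  # natural-language sub-goal produced by the planner
    item: str
    target_bin: str


def plan(items):
    """Hypothetical planner (reasoning role): emit one step per laundry item."""
    steps = []
    for name, color in items:
        target = "whites" if color == "white" else "darks"
        steps.append(Step(f"put the {name} in the {target} bin", name, target))
    return steps


def is_safe(step, blocked_bins):
    """Hypothetical safety check: reject steps whose target bin is obstructed."""
    return step.target_bin not in blocked_bins


def execute(step, bins):
    """Hypothetical executor (action role): only records the outcome here."""
    bins.setdefault(step.target_bin, []).append(step.item)


def run(items, blocked_bins=frozenset()):
    """Plan first, then check and carry out each step, keeping a readable log."""
    bins, log = {}, []
    for step in plan(items):
        if not is_safe(step, blocked_bins):
            log.append(f"skipped: {step.instruction}")
            continue
        log.append(f"doing: {step.instruction}")
        execute(step, bins)
    return bins, log
```

Calling `run([("shirt", "white"), ("sock", "black")])` returns the final bin contents together with a plain-language log of each step, which is the kind of inspectable intermediate plan the article refers to.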

Challenges & context

- Still limited in dexterity & fine manipulation

While the system can plan and reason, robots still struggle with delicate object handling, fine motor skills, and unpredictable environments.

- Learning by observation is hard

It’s difficult to scale learning purely from watching humans or other robots; training data for such rich interactions is limited, so these models depend heavily on curated datasets.

- Ethical, safety, and robustness considerations

Deploying robots with reasoning ability in human environments raises questions: how do we ensure they don’t make harmful mistakes, how transparent must their “thinking” be, and how do we manage failures? DeepMind has incorporated “safety policies” and is developing benchmarks (like ASIMOV) to evaluate safety.

- Robots are rapidly multiplying in factories

This comes against the backdrop of surging industrial robot demand: in 2024, some 542,000 industrial robots were newly installed, more than double the number installed ten years earlier.

Why this is big

This marks a turning point: robots are moving from being “tools that follow instructions” to “agents that plan and adapt.” The Gemini systems bring us closer to generalist robots that can operate in varied settings, rather than being locked to one domain. If scaled and refined, this could accelerate deployment in manufacturing, health care, home assistance, and more.